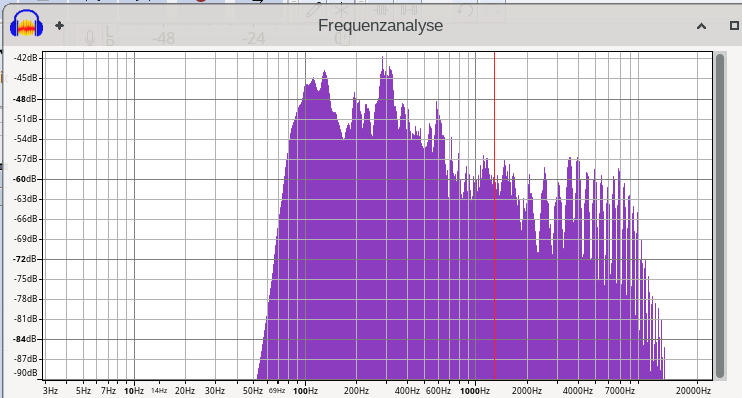

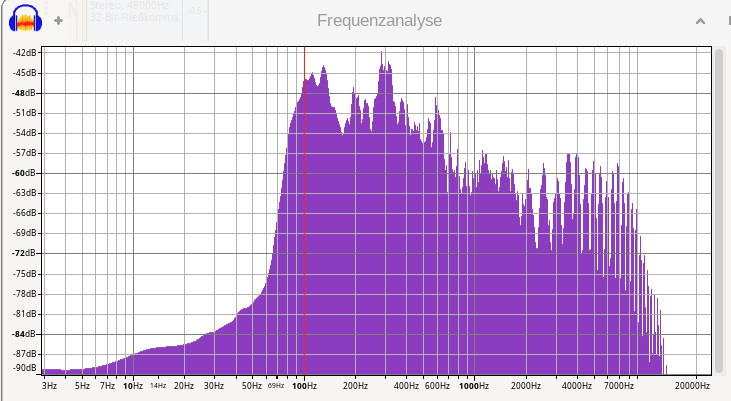

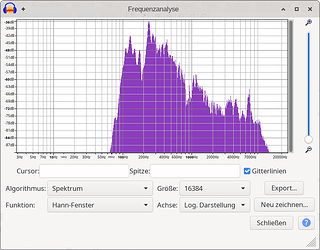

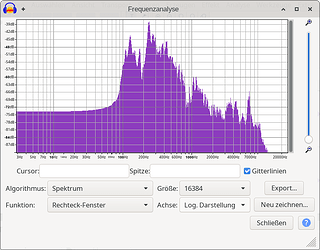

Who says that it’s “useless stuff”? If it’s not actually audible noise like power supply hum, or something else undesirable that you can hear, then it’s more likely to be high-end (beat) harmonics of sounds you do want. Removing them is just going to distort the colour, timbre and depth of those sounds, and make them seem hollower to anyone listening with good ears and good quality equipment.

The most pleasant sounds are very rarely “clean”.

You don’t say what codec you’re using in WAV, so I’m assuming it’s most probably uncompressed 16-bit PCM, which means the reconstruction will be “perfect”: garbage in, garbage out. Whatever damage you did by filtering out parts of the available bandwidth will be perfectly retained in the reconstruction. Your listening equipment (and the environment around it!) is almost certainly still going to re-introduce sounds across the whole spectrum in the reproduction though, Because Physics.

AAC, on the other hand, which is the usual default to pair with H.26x video in mp4 containers, is a lossy codec. That means much of its compression comes from “intelligently” discarding information that most people won’t be able to hear anyway, and reconstructing what it does keep to sound as imperceptibly different from the original as possible.

So there’s no sample plot you can look at to decide whether or not it did that well. The only way to know is to listen to it. And with most modern lossy codecs worth their salt, once you get above about 64 kb/s per channel, even people with really good ears and really good equipment become uncertain enough about whether they can actually hear a difference that the only way to be sure is a blind ABX test.
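To put a number on “being sure” in an ABX test: the usual check is how likely the listener’s score would be from pure guessing. Here is a minimal sketch of that calculation, assuming each trial is an independent 50/50 guess. The function name `abx_p_value` and the example scores are mine, purely for illustration.

```python
from math import comb

def abx_p_value(correct: int, trials: int) -> float:
    """Chance of scoring at least `correct` out of `trials` by guessing alone."""
    # One-sided binomial tail with p = 0.5 per trial.
    favourable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favourable / 2 ** trials

# Hypothetical sessions of 16 trials each.
print(f"12/16 correct: p = {abx_p_value(12, 16):.3f}")
print(f" 9/16 correct: p = {abx_p_value(9, 16):.3f}")
```

A small value means the score is unlikely to be luck. A score only slightly above half, like the second one, is entirely consistent with guessing.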

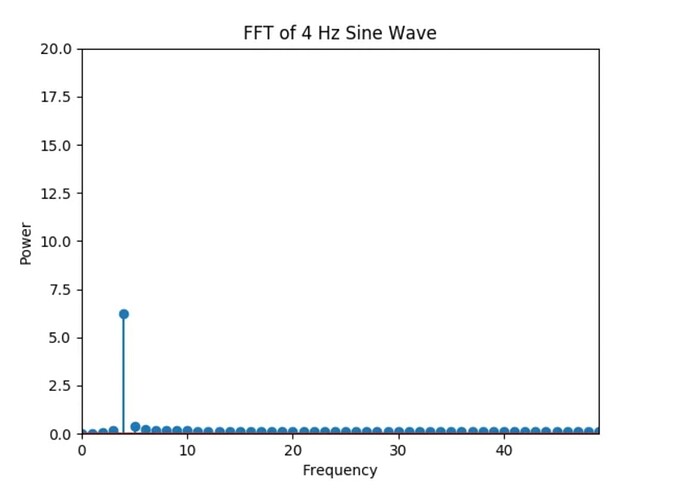

There are even cases where it’s highly beneficial to add noise. Look into dithering to see how carefully selected “random” noise can greatly improve the perceptual quality of reconstructed audio and video, and can in fact even increase the real dynamic range well beyond what might naively be calculated from the number of bits per sample.
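If you want to see the dithering effect for yourself, here is a rough sketch rather than anything production grade. It quantises a sine wave whose peak is only 0.4 of one quantisation step. The signal, its level and the seed are made up for the demo, and the dither is the common triangular (TPDF) kind, which is my choice of example.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the demo is repeatable
n = 2000

# A very quiet sine: peak amplitude 0.4 LSB, i.e. below half a quantisation step.
signal = [0.4 * math.sin(2 * math.pi * 5 * i / n) for i in range(n)]

def tpdf_dither() -> float:
    """Triangular noise in (-1, 1) LSB: the difference of two uniform values."""
    return rng.random() - rng.random()

plain = [round(s) for s in signal]                      # quantise as-is
dithered = [round(s + tpdf_dither()) for s in signal]   # add noise, then quantise

def correlation(out):
    """How much of the original sine is still present in the quantised output."""
    return sum(o * s for o, s in zip(out, signal))

print("without dither:", correlation(plain))
print("with dither:   ", correlation(dithered))
```

Without dither every sample rounds to zero, so the quiet signal is simply gone. With dither the output is noisier, but on average it still follows the sine, which is why detail below one step survives.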

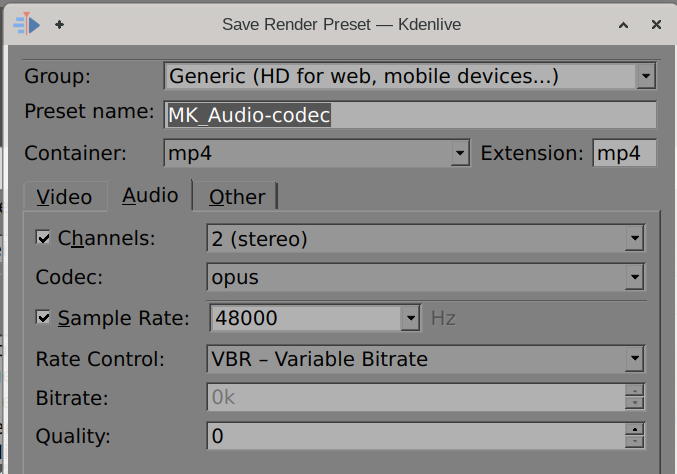

If you want to optimise for quality in compressed A/V, start by using the best available codec (which for audio would be Opus). Don’t filter out anything unless you can actually hear it and wish you couldn’t, don’t use a higher bitrate than the point where you stop hearing a desirable improvement, and don’t scare yourself unnecessarily by trying to look at what is in the sausage to judge its quality. Just worry about how it actually tastes. It was the codec designer’s problem to ensure there’s nothing toxic in there, not the end user’s.

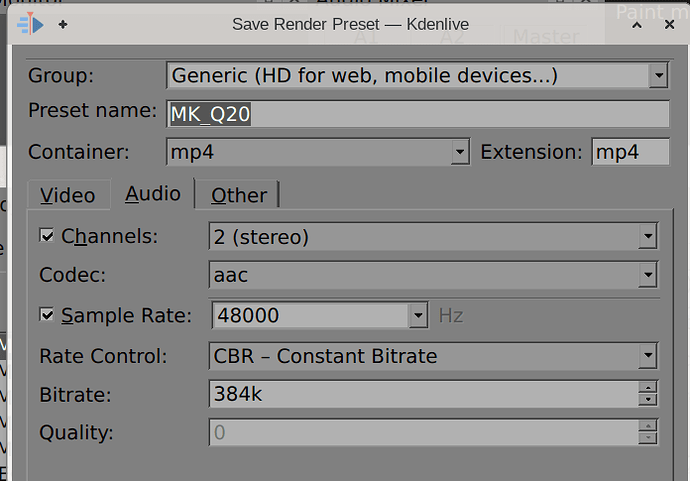

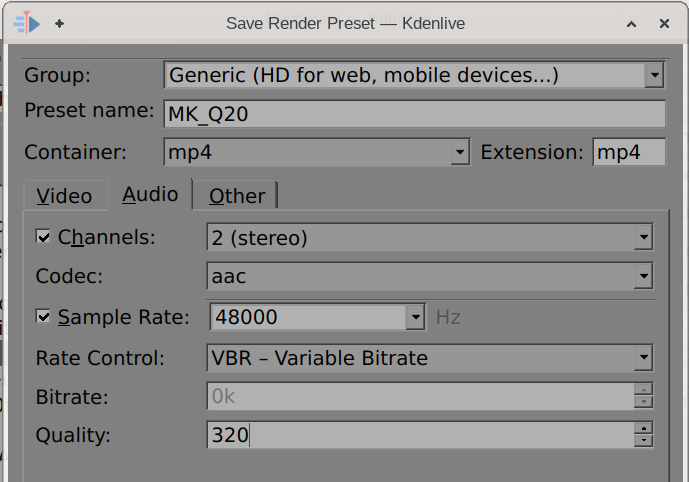

As for CBR vs VBR - about the only use case for CBR in a modern codec is streaming with very low latency when the amount of bandwidth available is guaranteed but strictly limited.

In pretty much every other case, in any codec worth considering, VBR will be superior. It saves the bits that CBR would be forced to “waste” on segments that are very simple to encode, and can spend them on the segments that are very difficult to encode, improving their perceptual quality while still keeping the “average” bitrate over the whole corpus lower than what would be needed to get that quality with CBR.
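As a toy illustration of that trade (not how any real encoder allocates bits), suppose each frame has some number of bits it “needs” to sound transparent. The frame values, the 60 bit average, and the proportional allocation rule below are all invented for the example.

```python
# Hypothetical bits each frame would need to sound transparent.
needed = [20, 20, 200, 40, 180, 20]
average_rate = 60                       # target bits per frame, same for both modes
budget = average_rate * len(needed)     # total bits available

def shortfall(given):
    """Total bits that frames were starved of."""
    return sum(max(0, n - g) for n, g in zip(needed, given))

def wasted(given):
    """Total bits handed to frames that did not need them."""
    return sum(max(0, g - n) for n, g in zip(needed, given))

# CBR: every frame gets the same amount, whether it needs it or not.
cbr = [average_rate] * len(needed)

# Toy VBR: share the same total budget in proportion to each frame's need.
scale = budget / sum(needed)
vbr = [n * scale for n in needed]

print("CBR shortfall:", shortfall(cbr), "wasted:", wasted(cbr))
print("VBR shortfall:", shortfall(vbr), "wasted:", wasted(vbr))
```

Both modes spend the same total here, but CBR starves the hard frames while padding the easy ones, and the toy VBR does neither.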

With single pass encoding that means some inputs will use more bits than CBR at the “same rate” and some will use fewer, since the nominal bitrate was calibrated over a large corpus. If you care about size and yours is “too large”, drop the rate a bit until you get the balance of size and quality you want.

There are intermediate methods of rate limiting, like “constrained VBR” which puts a hard cap on the size of the largest possible packet, but they are all trading quality for hard bandwidth limiting.

For “master” audio that you want to process and edit, use uncompressed PCM in WAV or similar, or a lossless codec like FLAC. Otherwise you’ll get new degradation every time you transcode lossy to lossy. But for the highest quality in the smallest size for “final” renders, you’re very rarely going to need more than Opus at 128 kb/s (for 2 channel audio), and even at lower rates than that, most people won’t be able to tell the difference from your original lossless audio in most cases.
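If you happen to use ffmpeg for this, that workflow might look something like the sketch below. I’m assuming an ffmpeg build with the `flac` and `libopus` encoders available. The file names and the helper `ffmpeg_audio_cmd` are hypothetical, and the script only prints the commands rather than running them.

```python
import shlex
from typing import List, Optional

def ffmpeg_audio_cmd(src: str, dst: str, codec: str,
                     bitrate: Optional[str] = None) -> List[str]:
    """Build (but do not run) an ffmpeg command line for an audio encode."""
    cmd = ["ffmpeg", "-i", src, "-c:a", codec]
    if bitrate is not None:
        cmd += ["-b:a", bitrate]   # target bitrate, only meaningful for lossy codecs
    return cmd + [dst]

# Lossless archive copy of the master: safe to edit and re-encode from.
archive = ffmpeg_audio_cmd("master.wav", "master.flac", "flac")

# Final render: always encode from the lossless master, never from another lossy file.
final = ffmpeg_audio_cmd("master.flac", "final.opus", "libopus", "128k")

print(shlex.join(archive))
print(shlex.join(final))
```

The point of the two steps is that the lossy encode only ever happens once, at the very end.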

Don’t try to voodoo this like many people do. Listen to it and trust what your ears actually hear.