Hi

Hello, everyone.

How are you? I hope you are fine.

Could you please help me?

The issue

First of all: yes, I went to the settings and configured everything necessary to use the “speech to text” feature.

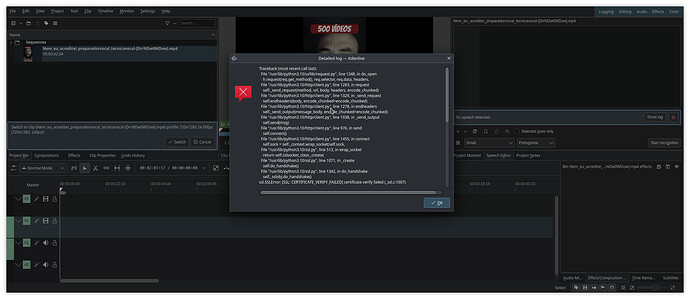

The KDEnlive App Image distribution apparently can’t download the Whisper models when you click “Start recognition”. After a few seconds, it shows the message “No speech detected” and a “Show log” button.

Log when trying “Start recognition” (with the “Large” model):

```
Traceback (most recent call last):
  File "/usr/lib/python3.10/urllib/request.py", line 1348, in do_open
    h.request(req.get_method(), req.selector, req.data, headers,
  File "/usr/lib/python3.10/http/client.py", line 1283, in request
    self._send_request(method, url, body, headers, encode_chunked)
  File "/usr/lib/python3.10/http/client.py", line 1329, in _send_request
    self.endheaders(body, encode_chunked=encode_chunked)
  File "/usr/lib/python3.10/http/client.py", line 1278, in endheaders
    self._send_output(message_body, encode_chunked=encode_chunked)
  File "/usr/lib/python3.10/http/client.py", line 1038, in _send_output
    self.send(msg)
  File "/usr/lib/python3.10/http/client.py", line 976, in send
    self.connect()
  File "/usr/lib/python3.10/http/client.py", line 1455, in connect
    self.sock = self._context.wrap_socket(self.sock,
  File "/usr/lib/python3.10/ssl.py", line 513, in wrap_socket
    return self.sslsocket_class._create(
  File "/usr/lib/python3.10/ssl.py", line 1071, in _create
    self.do_handshake()
  File "/usr/lib/python3.10/ssl.py", line 1342, in do_handshake
    self._sslobj.do_handshake()
ssl.SSLError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:1007)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/tmp/.mount_kdenliy36f99/usr/share/kdenlive/scripts/whispertotext.py", line 100, in <module>
    sys.exit(main())
  File "/tmp/.mount_kdenliy36f99/usr/share/kdenlive/scripts/whispertotext.py", line 84, in main
    result = run_whisper(source, model, device, task, language)
  File "/tmp/.mount_kdenliy36f99/usr/share/kdenlive/scripts/whispertotext.py", line 53, in run_whisper
    model = whisper.load_model(model, device)
  File "/home/matheus/.local/lib/python3.10/site-packages/whisper/__init__.py", line 133, in load_model
    checkpoint_file = _download(_MODELS[name], download_root, in_memory)
  File "/home/matheus/.local/lib/python3.10/site-packages/whisper/__init__.py", line 69, in _download
    with urllib.request.urlopen(url) as source, open(download_target, "wb") as output:
  File "/usr/lib/python3.10/urllib/request.py", line 216, in urlopen
    return opener.open(url, data, timeout)
  File "/usr/lib/python3.10/urllib/request.py", line 519, in open
    response = self._open(req, data)
  File "/usr/lib/python3.10/urllib/request.py", line 536, in _open
    result = self._call_chain(self.handle_open, protocol, protocol +
  File "/usr/lib/python3.10/urllib/request.py", line 496, in _call_chain
    result = func(*args)
  File "/usr/lib/python3.10/urllib/request.py", line 1391, in https_open
    return self.do_open(http.client.HTTPSConnection, req,
  File "/usr/lib/python3.10/urllib/request.py", line 1351, in do_open
    raise URLError(err)
urllib.error.URLError: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:1007)>
```
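To narrow down why only the App Image fails, one option (a diagnostic sketch based on my own assumptions, not a fix) is to print where this Python/OpenSSL combination looks for CA certificates. It uses only the standard `ssl` module; `ssl_diagnostics` is a hypothetical helper name. Running it with `python3` in a normal terminal and, if possible, from the same environment the App Image launches its scripts in, then comparing the two outputs, may show whether the certificate paths or the `SSL_CERT_FILE`/`SSL_CERT_DIR` overrides differ.

```python
import os
import ssl


def ssl_diagnostics() -> dict:
    """Collect the CA-certificate locations this Python/OpenSSL build will use."""
    paths = ssl.get_default_verify_paths()
    return {
        "openssl_version": ssl.OPENSSL_VERSION,
        # Resolved file/dir (env override or built-in default); None if missing.
        "cafile": paths.cafile,
        "capath": paths.capath,
        # OpenSSL's compiled-in defaults, before any environment override.
        "openssl_cafile": paths.openssl_cafile,
        "openssl_capath": paths.openssl_capath,
        # Environment variables OpenSSL checks first (normally SSL_CERT_FILE/SSL_CERT_DIR).
        paths.openssl_cafile_env: os.environ.get(paths.openssl_cafile_env),
        paths.openssl_capath_env: os.environ.get(paths.openssl_capath_env),
    }


if __name__ == "__main__":
    for key, value in ssl_diagnostics().items():
        print(f"{key}: {value}")
```

If `cafile` and `capath` both come out as `None` in one environment but not the other, that would point at missing certificate paths rather than at Whisper itself.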

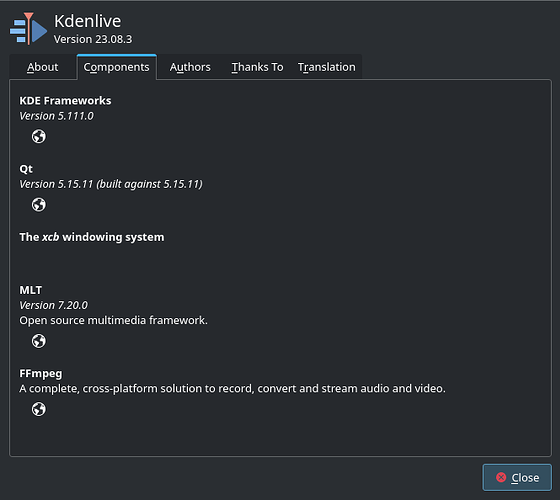

Testing KDEnlive from the Ubuntu repositories

Then I installed the software from the Ubuntu repositories. I went to the configuration panel to check whether “speech to text” was set up. I clicked “Check configuration” and it updated the torch package.

Here is the log:

```
Defaulting to user installation because normal site-packages is not writeable
Requirement already satisfied: torch in ./.local/lib/python3.10/site-packages (1.12.1)
Collecting torch
  Downloading torch-2.1.1-cp310-cp310-manylinux1_x86_64.whl.metadata (25 kB)
Requirement already satisfied: openai-whisper in ./.local/lib/python3.10/site-packages (20231117)
Requirement already satisfied: srt in ./.local/lib/python3.10/site-packages (3.5.3)
Requirement already satisfied: filelock in ./.local/lib/python3.10/site-packages (from torch) (3.13.1)
Requirement already satisfied: typing-extensions in ./.local/lib/python3.10/site-packages (from torch) (4.8.0)
Requirement already satisfied: sympy in ./.local/lib/python3.10/site-packages (from torch) (1.12)
Requirement already satisfied: networkx in ./.local/lib/python3.10/site-packages (from torch) (3.2.1)
Requirement already satisfied: jinja2 in ./.local/lib/python3.10/site-packages (from torch) (3.1.2)
Requirement already satisfied: fsspec in ./.local/lib/python3.10/site-packages (from torch) (2023.10.0)
Requirement already satisfied: nvidia-cuda-nvrtc-cu12==12.1.105 in ./.local/lib/python3.10/site-packages (from torch) (12.1.105)
Requirement already satisfied: nvidia-cuda-runtime-cu12==12.1.105 in ./.local/lib/python3.10/site-packages (from torch) (12.1.105)
Requirement already satisfied: nvidia-cuda-cupti-cu12==12.1.105 in ./.local/lib/python3.10/site-packages (from torch) (12.1.105)
Requirement already satisfied: nvidia-cudnn-cu12==8.9.2.26 in ./.local/lib/python3.10/site-packages (from torch) (8.9.2.26)
Requirement already satisfied: nvidia-cublas-cu12==12.1.3.1 in ./.local/lib/python3.10/site-packages (from torch) (12.1.3.1)
Requirement already satisfied: nvidia-cufft-cu12==11.0.2.54 in ./.local/lib/python3.10/site-packages (from torch) (11.0.2.54)
Requirement already satisfied: nvidia-curand-cu12==10.3.2.106 in ./.local/lib/python3.10/site-packages (from torch) (10.3.2.106)
Requirement already satisfied: nvidia-cusolver-cu12==11.4.5.107 in ./.local/lib/python3.10/site-packages (from torch) (11.4.5.107)
Requirement already satisfied: nvidia-cusparse-cu12==12.1.0.106 in ./.local/lib/python3.10/site-packages (from torch) (12.1.0.106)
Requirement already satisfied: nvidia-nccl-cu12==2.18.1 in ./.local/lib/python3.10/site-packages (from torch) (2.18.1)
Requirement already satisfied: nvidia-nvtx-cu12==12.1.105 in ./.local/lib/python3.10/site-packages (from torch) (12.1.105)
Requirement already satisfied: triton==2.1.0 in ./.local/lib/python3.10/site-packages (from torch) (2.1.0)
Requirement already satisfied: nvidia-nvjitlink-cu12 in ./.local/lib/python3.10/site-packages (from nvidia-cusolver-cu12==11.4.5.107->torch) (12.3.101)
Requirement already satisfied: more-itertools in /usr/lib/python3/dist-packages (from openai-whisper) (8.10.0)
Requirement already satisfied: numba in ./.local/lib/python3.10/site-packages (from openai-whisper) (0.58.1)
Requirement already satisfied: numpy in ./.local/lib/python3.10/site-packages (from openai-whisper) (1.26.2)
Requirement already satisfied: tiktoken in ./.local/lib/python3.10/site-packages (from openai-whisper) (0.5.1)
Requirement already satisfied: tqdm in ./.local/lib/python3.10/site-packages (from openai-whisper) (4.66.1)
Requirement already satisfied: MarkupSafe>=2.0 in ./.local/lib/python3.10/site-packages (from jinja2->torch) (2.1.3)
Requirement already satisfied: llvmlite<0.42,>=0.41.0dev0 in ./.local/lib/python3.10/site-packages (from numba->openai-whisper) (0.41.1)
Requirement already satisfied: mpmath>=0.19 in ./.local/lib/python3.10/site-packages (from sympy->torch) (1.3.0)
Requirement already satisfied: regex>=2022.1.18 in ./.local/lib/python3.10/site-packages (from tiktoken->openai-whisper) (2023.10.3)
Requirement already satisfied: requests>=2.26.0 in ./.local/lib/python3.10/site-packages (from tiktoken->openai-whisper) (2.31.0)
Requirement already satisfied: charset-normalizer<4,>=2 in ./.local/lib/python3.10/site-packages (from requests>=2.26.0->tiktoken->openai-whisper) (3.3.2)
Requirement already satisfied: idna<4,>=2.5 in ./.local/lib/python3.10/site-packages (from requests>=2.26.0->tiktoken->openai-whisper) (3.4)
Requirement already satisfied: urllib3<3,>=1.21.1 in ./.local/lib/python3.10/site-packages (from requests>=2.26.0->tiktoken->openai-whisper) (2.1.0)
Requirement already satisfied: certifi>=2017.4.17 in ./.local/lib/python3.10/site-packages (from requests>=2.26.0->tiktoken->openai-whisper) (2023.11.17)
Downloading torch-2.1.1-cp310-cp310-manylinux1_x86_64.whl (670.2 MB)
   ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 670.2/670.2 MB 4.9 MB/s eta 0:00:00
Installing collected packages: torch
  Attempting uninstall: torch
    Found existing installation: torch 1.12.1
    Uninstalling torch-1.12.1:
      Successfully uninstalled torch-1.12.1
Successfully installed torch-2.1.1
Updating packages: {'torch', 'openai-whisper', 'srt'}
```

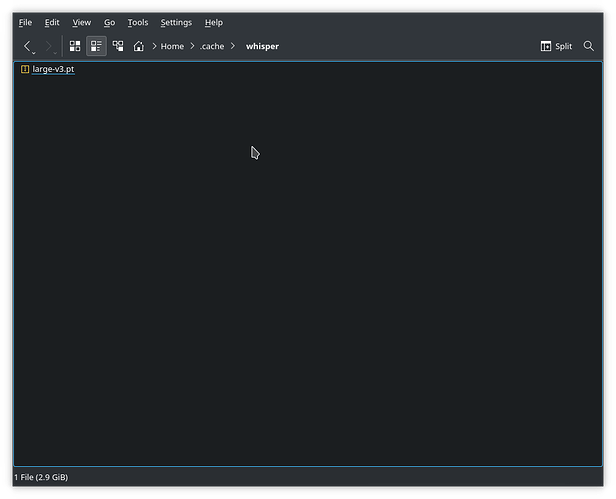

So when I clicked the “Start recognition” button again, it worked as it should. I went back to the App Image and now it also works, but only when the “Large” model is selected, because that is the model I downloaded with the repository version. The models are stored in ~/.cache/whisper.
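A quick way to see which models are already cached (and therefore will not need to be downloaded by the App Image) is sketched below. It assumes openai-whisper's default download location, which as far as I can tell is `$XDG_CACHE_HOME/whisper`, falling back to `~/.cache/whisper`, and that checkpoints are saved as `*.pt` files; `whisper_cache_dir` and `cached_models` are hypothetical helper names.

```python
import os
from pathlib import Path
from typing import List, Optional


def whisper_cache_dir() -> Path:
    # Assumed to mirror openai-whisper's default download_root:
    # $XDG_CACHE_HOME/whisper, or ~/.cache/whisper when XDG_CACHE_HOME is unset.
    default = os.path.join(os.path.expanduser("~"), ".cache")
    return Path(os.getenv("XDG_CACHE_HOME", default)) / "whisper"


def cached_models(root: Optional[Path] = None) -> List[str]:
    """Return the checkpoint file names (*.pt) found in the whisper cache, sorted."""
    root = whisper_cache_dir() if root is None else Path(root)
    if not root.is_dir():
        return []
    return sorted(p.name for p in root.glob("*.pt"))


if __name__ == "__main__":
    print(whisper_cache_dir(), cached_models())
```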

Coming back to App Image…

Still in the App Image, when you select another model, like “Small”, the same error happens again; it only changes slightly, starting from the following line:

```
Traceback (most recent call last):
  File "/tmp/
```

Log when trying “Start recognition” (with the “Small” model):

```
Traceback (most recent call last):
  File "/usr/lib/python3.10/urllib/request.py", line 1348, in do_open
    h.request(req.get_method(), req.selector, req.data, headers,
  File "/usr/lib/python3.10/http/client.py", line 1283, in request
    self._send_request(method, url, body, headers, encode_chunked)
  File "/usr/lib/python3.10/http/client.py", line 1329, in _send_request
    self.endheaders(body, encode_chunked=encode_chunked)
  File "/usr/lib/python3.10/http/client.py", line 1278, in endheaders
    self._send_output(message_body, encode_chunked=encode_chunked)
  File "/usr/lib/python3.10/http/client.py", line 1038, in _send_output
    self.send(msg)
  File "/usr/lib/python3.10/http/client.py", line 976, in send
    self.connect()
  File "/usr/lib/python3.10/http/client.py", line 1455, in connect
    self.sock = self._context.wrap_socket(self.sock,
  File "/usr/lib/python3.10/ssl.py", line 513, in wrap_socket
    return self.sslsocket_class._create(
  File "/usr/lib/python3.10/ssl.py", line 1071, in _create
    self.do_handshake()
  File "/usr/lib/python3.10/ssl.py", line 1342, in do_handshake
    self._sslobj.do_handshake()
ssl.SSLError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:1007)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/tmp/.mount_kdenlidT2SkE/usr/share/kdenlive/scripts/whispertotext.py", line 100, in <module>
    sys.exit(main())
  File "/tmp/.mount_kdenlidT2SkE/usr/share/kdenlive/scripts/whispertotext.py", line 84, in main
    result = run_whisper(source, model, device, task, language)
  File "/tmp/.mount_kdenlidT2SkE/usr/share/kdenlive/scripts/whispertotext.py", line 53, in run_whisper
    model = whisper.load_model(model, device)
  File "/home/matheus/.local/lib/python3.10/site-packages/whisper/__init__.py", line 133, in load_model
    checkpoint_file = _download(_MODELS[name], download_root, in_memory)
  File "/home/matheus/.local/lib/python3.10/site-packages/whisper/__init__.py", line 69, in _download
    with urllib.request.urlopen(url) as source, open(download_target, "wb") as output:
  File "/usr/lib/python3.10/urllib/request.py", line 216, in urlopen
    return opener.open(url, data, timeout)
  File "/usr/lib/python3.10/urllib/request.py", line 519, in open
    response = self._open(req, data)
  File "/usr/lib/python3.10/urllib/request.py", line 536, in _open
    result = self._call_chain(self.handle_open, protocol, protocol +
  File "/usr/lib/python3.10/urllib/request.py", line 496, in _call_chain
    result = func(*args)
  File "/usr/lib/python3.10/urllib/request.py", line 1391, in https_open
    return self.do_open(http.client.HTTPSConnection, req,
  File "/usr/lib/python3.10/urllib/request.py", line 1351, in do_open
    raise URLError(err)
urllib.error.URLError: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:1007)>
```

Culprit!

After all this, is there any solution to this issue? It is very strange. At first I thought the problem came from the packages shipped with KDE Neon, but since the recognition worked as soon as I installed KDEnlive from the Ubuntu repositories, it seems that the App Image is the culprit.

- Almost the same issue was reported in KDEnlive’s subreddit.

- Another nearly identical issue was reported on Ask Ubuntu, but related only to Whisper.

I tried running these commands in the terminal, but the KDEnlive “Start recognition” error stayed the same:

```
pip install --upgrade certifi
sudo update-ca-certificates --fresh
export SSL_CERT_DIR=/etc/ssl/certs
```
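Since those commands did not change the result, a further check (a sketch, not a confirmed fix) is to make one HTTPS request from Python with an explicitly chosen CA bundle and see whether the certificate check passes. `/etc/ssl/certs/ca-certificates.crt` is where Ubuntu-based systems keep the system bundle; `make_context` and `tls_check` are hypothetical names, and a `HEAD` request is used so that no model is actually downloaded.

```python
import ssl
import urllib.error
import urllib.request
from typing import Optional, Tuple


def make_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    # cafile=None -> OpenSSL's default CA paths (which honour SSL_CERT_FILE /
    # SSL_CERT_DIR); otherwise only the given PEM bundle is trusted.
    return ssl.create_default_context(cafile=cafile)


def tls_check(url: str, cafile: Optional[str] = None, timeout: float = 10.0) -> Tuple[bool, str]:
    """Return (handshake_ok, detail) for a single HEAD request to url."""
    request = urllib.request.Request(url, method="HEAD")  # headers only, nothing downloaded
    try:
        context = make_context(cafile)  # raises OSError if cafile cannot be loaded
        with urllib.request.urlopen(request, context=context, timeout=timeout) as response:
            return True, f"HTTP {response.status}"
    except urllib.error.HTTPError as err:
        # The server answered with an HTTP error status, so the TLS handshake succeeded.
        return True, f"HTTP {err.code}"
    except OSError as err:
        # URLError, ssl.SSLError and socket errors are all OSError subclasses.
        return False, str(err)
```

For example, comparing `tls_check("<model URL>")` with `tls_check("<model URL>", cafile="/etc/ssl/certs/ca-certificates.crt")` in the App Image environment: if only the explicit-bundle call succeeds, that would suggest the default CA paths seen there are the problem.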

Workaround

A workaround is to download the model manually and place it in ~/.cache/whisper.

The links to the models are available here:

- tiny.en
- tiny
- base.en
- base
- small.en
- small
- medium.en
- medium
- large-v1
- large-v2
- large-v3
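When placing a file by hand, it may be worth confirming that Whisper will accept it. From my reading of openai-whisper's source (version 20231117 in the log above), it saves each checkpoint under the file name at the end of its URL and compares the file's SHA256 with the second-to-last URL segment, re-downloading (and so hitting the SSL error again) on a mismatch. The sketch below assumes that URL layout; the function names are hypothetical.

```python
import hashlib
import os
from typing import Optional


def whisper_target(url: str, root: Optional[str] = None) -> str:
    """Path where whisper should find the checkpoint: <root>/<file name from the URL>."""
    root = root if root is not None else os.path.expanduser("~/.cache/whisper")
    return os.path.join(root, os.path.basename(url))


def expected_sha256(url: str) -> str:
    # Assumption: model URLs look like .../models/<sha256>/<name>.pt
    return url.split("/")[-2]


def matches_checksum(path: str, url: str) -> bool:
    """True if the file at path has the SHA256 encoded in url."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):  # read 1 MiB at a time
            digest.update(chunk)
    return digest.hexdigest() == expected_sha256(url)
```

Usage: after saving a model downloaded from one of the links above, `matches_checksum(whisper_target(url), url)` should return `True` if the file is complete and correctly named.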

Hardware and O.S. specs

- Operating System: KDE neon 5.27
- KDE Plasma Version: 5.27.9
- KDE Frameworks Version: 5.111.0
- Qt Version: 5.15.11
- Kernel Version: 6.2.0-37-generic (64-bit)
- Graphics Platform: X11
- Processors: 12 × AMD Ryzen 5 3600 6-Core Processor
- Memory: 15.5 GiB of RAM
- Graphics Processor: AMD Radeon RX 570 Series
- Manufacturer: Gigabyte Technology Co., Ltd.
- Product Name: B450M DS3H

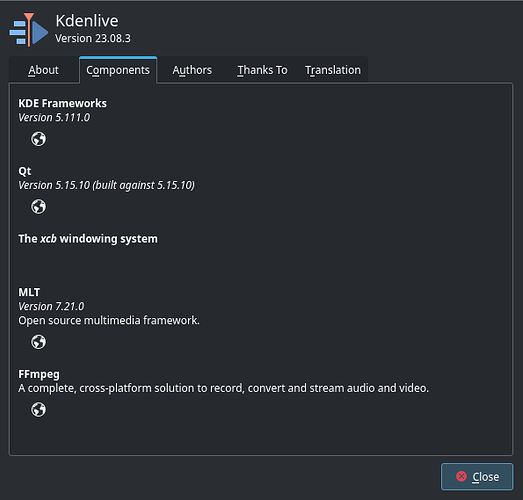

KDEnlive specs

- App Image
- KDE Frameworks: Version 5.111.0
- Qt: Version 5.15.10 (built against 5.15.10), the xcb windowing system
- MLT: Version 7.21.0, open source multimedia framework